When to Ask, When to Teach: A Guide to Working With AI

Recently, I noticed some subtle differences in how I interact with my AI assistant (Mochi, named after my first pet hamster), depending on the topic at hand. For example, when I ask Mochi to plan a trip itinerary for me, I provide a lot of context about my preferences and dictate what I want the ideal outcome to look and feel like. When I need a brainstorming partner on content topics, on the other hand, I take a more “big picture” approach, sharing bits and pieces of the ideas I want to build upon and asking Mochi to help me refine and shape them.

As someone who hangs out with an AI assistant regularly, my approach made sense to me. However, when I tried to explain this to my husband, I was met with a confused look and a lot of questions, particularly around how I knew when to ask the questions, when to dictate the outcomes I wanted, when to leave Mochi to run with things, etc. Of course, my initial response was “you just figure it out as you go”. And of course, I realized later that the “figure it out” answer doesn’t really help anyone.

So, I did some research and discovered a framework that other AI prompt experts and engineers use to explain and contextualize human-AI interactions. As all good things should be shared, here it is:

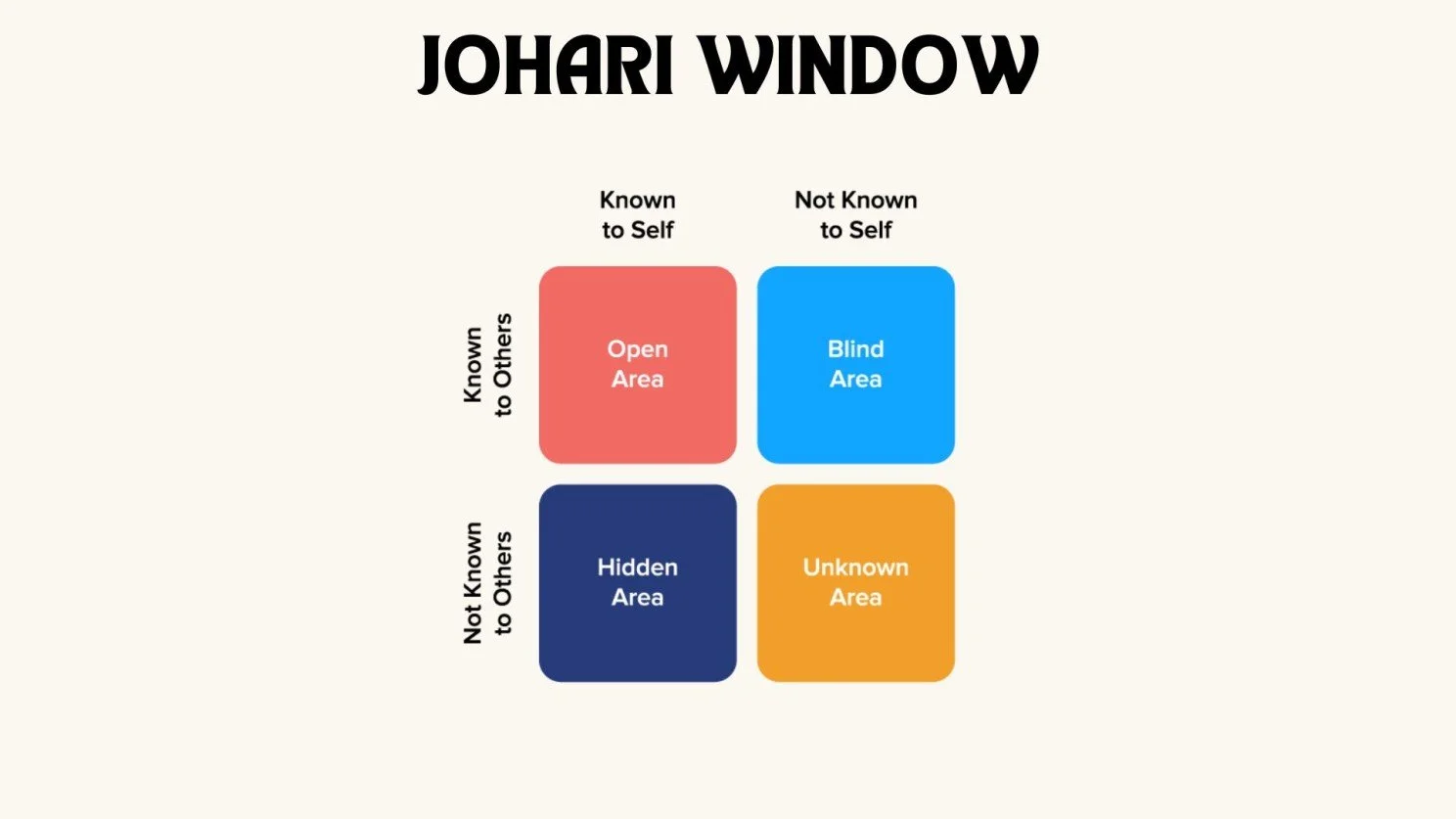

This framework is a spin-off of the Johari Window. If you have a background in psychology, I’m sure you have heard of it. For those who may be wondering what it is, here’s the TLDR version: The Johari Window is a psychological model created in 1955 that helps people understand themselves and their relationships with others. The X-axis denotes what is known to you, and the Y-axis denotes what is known to others. Together, they divide your self-awareness into four quadrants: Open, Blind Spot, Hidden, and Unknown. By exploring these quadrants through self-reflection and feedback from others, you can enhance your self-awareness, improve your communication, and foster stronger relationships.

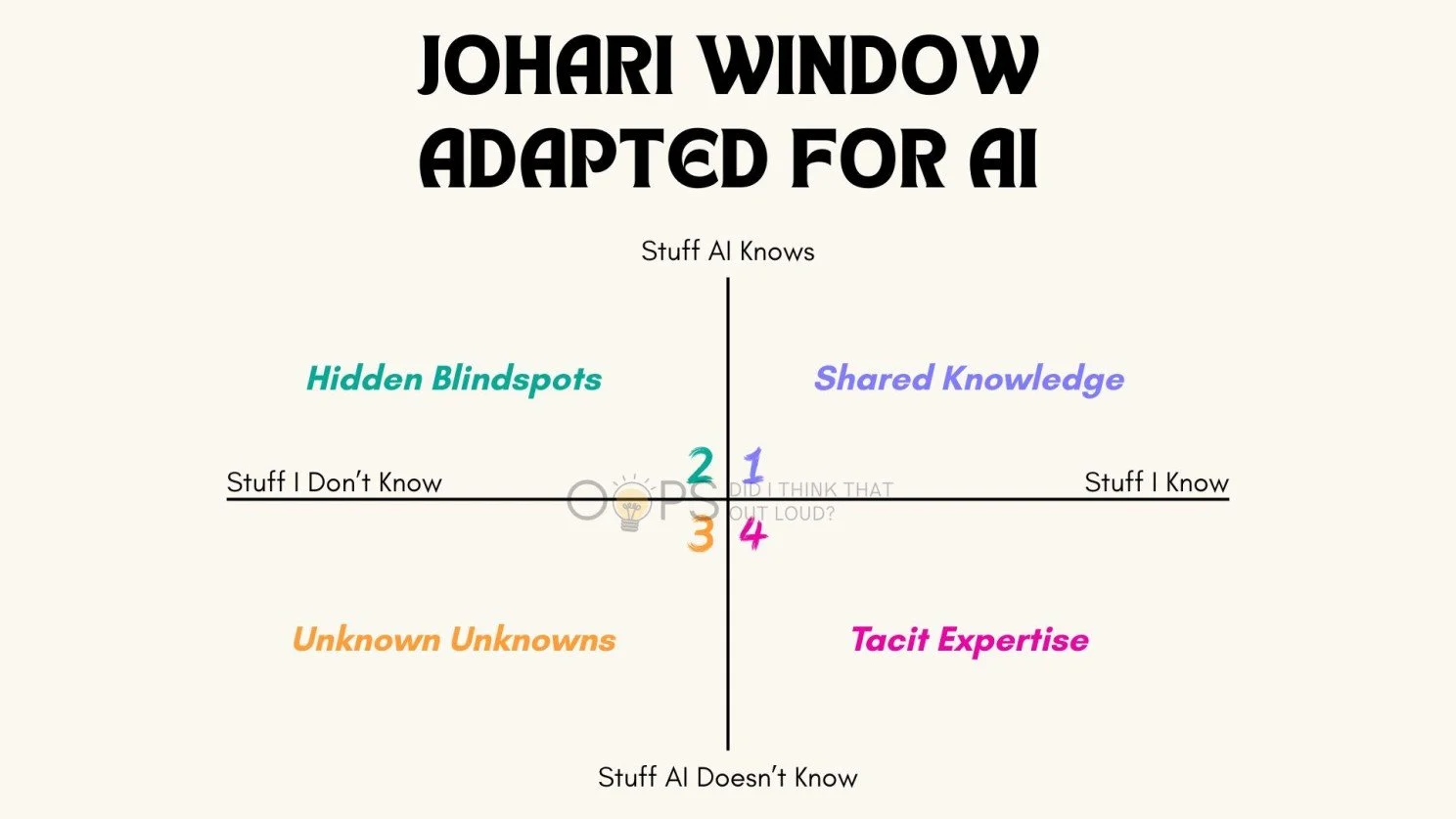

OK, that’s about as academic as we’re going to get in this article. Here is the adapted version of the Johari Window for AI: on the X-axis, put the stuff you know on the right and the stuff you don’t know on the left. On the Y-axis, put the stuff AI knows at the top and the stuff AI doesn’t know at the bottom.

You have now created four quadrants that frame your interactions with AI, along with the risks associated with AI-generated outputs in each one. Let’s get into it:

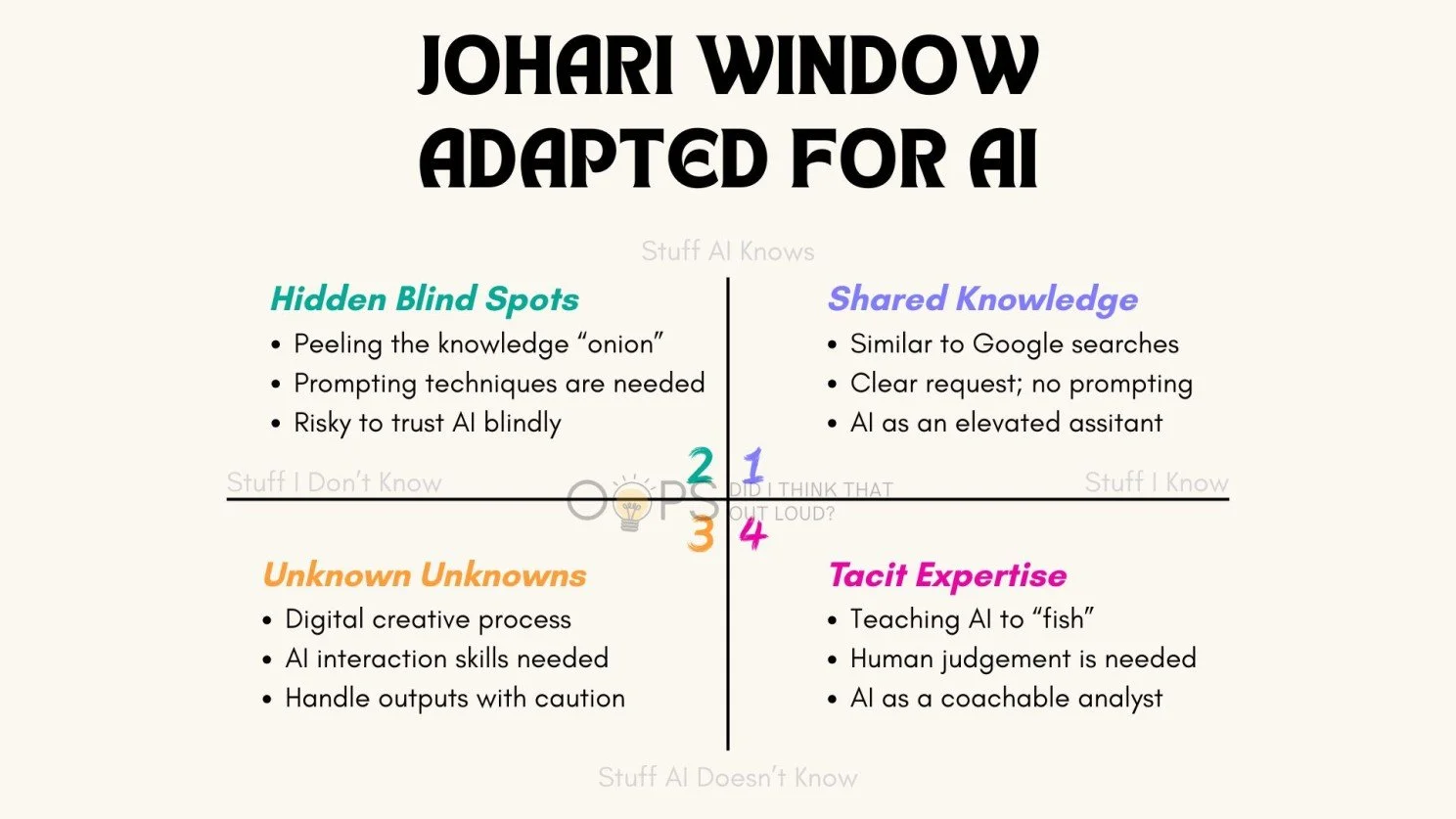

Quadrant 1: Shared Knowledge

This is where both you and AI have knowledge on the topic. In my case, it’s travel itinerary planning. AI knows the location, and I know my travel preferences. So between Mochi and me, this interaction looks a lot more like a personalized, advanced Google search. I share my request, provide my preferences, outline my ideal outcome, and let Mochi do the rest.

In this case, very little prompting technique is needed; I am basically asking Mochi a question, and it’s giving me a tailored response based on what I want. It is serving as an elevated knowledge assistant here.

In work scenarios, this is what most of us use AI for today: drafting emails, creating content copy, getting a how-to guide on formatting files, etc. It’s also where the risks are limited, since you know the exact outcome you’re looking for and can immediately catch anything that doesn’t look or sound right.

Quadrant 2: Hidden Blind Spots

This is where things get interesting. Hidden Blind Spots are places where AI has the knowledge and you don’t. A recent example of this is when I was digging into the EU AI Act. The extent of my knowledge prior to that was: 1) the EU AI Act exists, 2) it will impact HR teams and their technology decisions, and 3) it’s being rolled out over a few years. At that point, I had no idea exactly what the Act stipulated, how it would impact HR teams, or what the rollout timeline looked like. So, I had a starting point of what I didn’t know and wanted to find out, but it took some partnership work between Mochi and me to get to the answers.

In this particular case, it felt like peeling back a “knowledge onion” to me. I kind of knew what I wanted to learn more about, but had no idea how to ask the right questions or use the right words. I had to apply some prompting techniques here: giving Mochi a role, giving it some context, and being very specific about what I intended to do with the outcomes.

This is also where you cannot trust AI blindly, because “the machine told me to do it” is not defensible in any court. In my case, Mochi tried to be a bit of a drama queen and made the potential impacts of the upcoming EU AI Act milestone sound a lot more dramatic than they actually are for HR. By noticing some inconsistencies in its responses and asking it to cite its sources, I was able to fact-check Mochi’s initial responses and fine-tune the rest of our conversation.

We often encounter scenarios at work where the AI knows more than we do. When you encounter these situations, remember that AI sometimes acts like a people-pleasing child. Even when it doesn’t have all the answers, it will cobble together information to pretend it has one, and it will do its best to make you feel good about the answer it’s giving you. So, if you are using AI in a topic area that you are not too familiar with, always make sure to fact-check the responses you’re getting.
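For readers who like to see things written down, here is a minimal sketch of how the prompting techniques from this quadrant (a role, some context, the intended use, and a request for sources) could be assembled into one prompt. The function name `build_prompt`, its parameters, and the example wording are all hypothetical and purely for illustration; this is not the exact prompt I used with Mochi.

```python
def build_prompt(role: str, context: str, purpose: str, question: str) -> str:
    """Assemble a prompt from the four ingredients described above.

    All names here are illustrative assumptions, not a standard API.
    """
    parts = [
        f"Act as {role}.",                                   # give the AI a role
        f"Context: {context}",                               # share what you already know
        f"What I will do with your answer: {purpose}",       # be specific about the intended use
        f"Question: {question}",                             # the actual ask
        "Cite a source for each claim so I can verify it.",  # makes fact-checking easier
    ]
    return "\n".join(parts)


# Illustrative values only.
example = build_prompt(
    role="an analyst who explains regulations to HR teams",
    context="I know the EU AI Act exists and is being rolled out over a few years.",
    purpose="brief my HR colleagues on what to prepare for",
    question="What does the Act stipulate that is relevant to HR technology decisions?",
)
print(example)
```

Asking for sources does not guarantee the answer is right, but it gives you something concrete to check.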

Quadrant 3: Unknown Unknowns

We’re now venturing into rare territory, though it does still happen, where neither you nor the AI knows the exact answer. In the purest sense, this situation doesn’t happen often, because if you didn’t know something existed, how would it occur to you to prompt the AI about it? And given how much general knowledge AI models have access to today, it’s rare to run into an actual scenario where the AI doesn’t know anything at all (well, unless you get into some sensitive topics with DeepSeek, but that’s a whole different topic for another day).

The closest situation to this that I recently experienced was related to Java plums (also known as jamun berries). Here’s a picture of them below in case you’re wondering what I’m talking about. For reference, I had never eaten or seen Java plums in my life until recently, when the fruit stall around the corner started stocking them because they are in season. I trust my fruit monger implicitly; it’s a bit of an unspoken relationship we’ve built over the years. So, when she tells me that this stuff is good, of course, I’m going to buy it and try it without even asking her what it’s called.

When I brought them home, I realized that I had totally forgotten to ask her how to eat them. Do I eat the skin, is the core poisonous, etc.? So, as any “I don’t want to leave the house again” human would do, I turned to ChatGPT. Since ChatGPT had no idea what I had just bought, and neither did I, we went through a bit of a co-creation / clarification process. I first uploaded an image to ask ChatGPT what it was. It told me it was a plum and that I should eat it like a plum. Having seen larger plums before, I had my doubts about the response. So, I clarified that I am in India, that this doesn’t look like a regular plum, and that whatever fruit this is, it’s in season in India right now. Based on that, ChatGPT gave me its best guess that I was looking at a Java plum (or jamun berry), and from there, I got a detailed set of instructions on how to eat it, how to tell if it’s ripe, and what taste profile to expect.

The lesson for me here is that in the category of Unknown Unknowns, you need to have the patience to interact with AI, because it is often a dialogue rather than a Q&A process. You also need to handle the outputs with care, because had I believed its initial response that the berries were just another plum, I would’ve totally bitten into a few sour ones.

Quadrant 4: Tacit Expertise

This is where things get more fun, and you get to teach the AI. This quadrant consists of things you know that the AI doesn’t, including any contextual experience and knowledge that differs from the data the AI was trained on. Think of your AI as a coachable intern in this scenario. It has some idea of how to do things, but it needs some contextual knowledge and details from you before it gets started.

In work scenarios, this can range from giving your AI contextual knowledge of your organization and stakeholders so it can create a detailed change management plan, to correcting an inaccurate assumption it has made based on its training data. As you work with your AI tool more and more, this quadrant will shrink over time as you encode more of your knowledge into the AI.
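Pulling the four quadrants together, here is a minimal sketch of the framework as a small lookup: given whether you know the topic and whether the AI knows it, it returns the quadrant and a one-line reminder of the approach described in that section. The names `Quadrant`, `APPROACH`, and `classify` are hypothetical, and the one-line summaries are my own shorthand for the sections above.

```python
from enum import Enum


class Quadrant(Enum):
    SHARED_KNOWLEDGE = "Quadrant 1: Shared Knowledge"
    HIDDEN_BLIND_SPOTS = "Quadrant 2: Hidden Blind Spots"
    UNKNOWN_UNKNOWNS = "Quadrant 3: Unknown Unknowns"
    TACIT_EXPERTISE = "Quadrant 4: Tacit Expertise"


# Shorthand reminders (illustrative wording) for how to approach each quadrant.
APPROACH = {
    Quadrant.SHARED_KNOWLEDGE: "Ask directly, share your preferences, and review the output yourself.",
    Quadrant.HIDDEN_BLIND_SPOTS: "Give a role, context, and intended use; ask for sources and fact-check.",
    Quadrant.UNKNOWN_UNKNOWNS: "Treat it as a dialogue; clarify step by step and handle outputs with care.",
    Quadrant.TACIT_EXPERTISE: "Teach the AI: supply your context and correct wrong assumptions.",
}


def classify(you_know: bool, ai_knows: bool) -> Quadrant:
    """Map the two axes (what you know, what the AI knows) to a quadrant."""
    if you_know and ai_knows:
        return Quadrant.SHARED_KNOWLEDGE
    if ai_knows:
        return Quadrant.HIDDEN_BLIND_SPOTS
    if you_know:
        return Quadrant.TACIT_EXPERTISE
    return Quadrant.UNKNOWN_UNKNOWNS


# Example: a topic the AI knows well but you don't.
quadrant = classify(you_know=False, ai_knows=True)
print(quadrant.value)
print(APPROACH[quadrant])
```

In practice, the hard part is judging honestly which quadrant you are in; the lookup is only a memory aid.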

So, there you have it: four quadrants and a quick framework to help you better interact with your AI tool of choice!